Augmented and virtual reality are storming every industry, and libraries are no exception. But other forms of immersive technologies should also be considered. Fast-moving, with fascinating things on the horizon, they need only the imagination and creativity of information professionals to flourish in libraries.

Immersive technologies imitate or enhance our physical world via digital simulations to give us the sense of being completely absorbed into something or having content extended over our own reality. Extended reality (XR) describes the assortment of immersive technologies that are available today. The X is a variable that now includes 360° imagery, virtual reality (VR), augmented reality (AR), mixed reality (MR), and spatial computing. They offer some aspect of digital technology that blends into our physical world. Adding immersive technologies to the long and growing list of experiences that libraries and other educational institutions offer gives users opportunities to engage with these technologies. It opens their minds to recognize what is possible today and gives them a glimpse into how humanity will one day interact with computers.

Many universities have programs that conduct XR research and development: Stanford’s Virtual Human Interaction Lab, University of Southern California’s Computer Graphics and Immersive Technologies Lab, Iowa State Virtual Reality Applications Center, Teesside University’s Intelligent Virtual Environments Lab, and Liverpool John Moores University’s Immersive Storylab among them. As consumer awareness grows, more institutions are starting to focus on these technologies, and a new generation of knowledge is being created as a result. PennImmersive, a University of Pennsylvania research project, is exploring the potential of XR in teaching, research, and learning. It challenges the library staff to develop services and practices that support new forms of scholarship and teaching. This is vital now and will be more so in the near future.

XR has the potential to bring people, places, and experiences closer together. XR makes virtual objects appear real to our perception, which explains why, in many cases, virtual reality can treat phobias and other ailments. For example, a partially blind student visiting the Innovation Lab at the St. Petersburg College Seminole Campus tried VR for the first time in early 2020. Initially, the student did not think it would work for him, but he wanted to try it anyway and was excited to find that he could see more vividly. The VR experience helped increase the peripheral vision in his functioning eye, and it allowed him to paint in 3D using Google’s Tilt Brush application.

Still, it is important to consider the potential dangers of XR, as the boundaries of our physical and digital realities start to blur. Science-fiction films such as eXistenZ, The Matrix, Lawnmower Man, Play, and many others have depicted people who are unable to distinguish their physical world from their digital world. A tough question to consider is whether horrendous behaviors, such as rape, torture, and criminal sexual misconduct, should be considered illegal if they happen inside an immersive environment, since the behavior is not necessarily occurring in the real world. Luckily, several institutions are conducting important research on these technological, legal, and philosophical developments.

360° Imagery

360° panorama photographs and videos are starting to become widely used thanks to integrated gyroscopes, VR, and other emerging technologies. Developing 360° experiences is not difficult, and adding them to your web presence can create a sense of immersion that realistically showcases an institution’s resources and services.

Most smartphones can create 360° photographs using apps such as Google Street View, but it’s a tedious process. However, a 360° camera, with the press of a button, can capture two images or video files from dual lenses, each with a 180° field of view. 360° cameras automatically stitch panoramic images together inside the camera or after the shot is captured via the companion software, creating one cohesive image. The companion software also includes exposure controls, self-timers, ways to live-preview the scene, options to share content and/or live-stream, and other functions.

With YouTube and Facebook embracing 360° video and images, people are beginning to realize the full potential of this technology. Real estate brokers, hotels, and libraries are using 360° imagery to give people immersive tours of homes for sale and of their buildings. Applications such as Matterport can connect to 360° cameras to capture 3D scans of entire rooms, adding more depth and detail to virtual tours. South Huntington Public Library in New York created a 360° tour that gives users an opportunity to explore the library as if they were physically there (shpl.info). You can also view the building in dollhouse or blueprint mode, with a measurement tool included, to get a detailed idea of the size of each room.

360° photographs and video are the most basic and probably the most familiar immersive technologies available right now. 360° VR content allows mobile device users to explore content by looking in any direction. However, that content does not account for depth: everything is always the same distance from the user’s eyes. 360° technologies use three degrees of freedom (3DoF), meaning the user can turn left or right (yaw), look up or down (pitch), and tilt side-to-side (roll). With 3DoF, the view rotates as users turn their heads, but it does not change as they walk around, because the image was captured from one fixed vantage point.
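To make the 3DoF idea concrete, here is a minimal sketch of how a viewer might turn head rotation into a position inside a 360° image. It assumes the image is stored in the common equirectangular layout (full circle across the width, straight up to straight down across the height); the function name `view_center_pixel` and its parameters are hypothetical and not taken from any particular viewer.

```python
def view_center_pixel(yaw_deg, pitch_deg, width, height):
    """Map a 3DoF gaze direction to (x, y) in an equirectangular image.

    Assumptions (illustrative only):
      yaw_deg   -- 0 looks at the image centre; positive turns right.
      pitch_deg -- 0 looks at the horizon; positive looks up.
    Roll is ignored here: it rotates the view around this centre point
    but does not move the centre itself.
    """
    # A head cannot pitch past straight up or straight down.
    pitch_deg = max(-90.0, min(90.0, pitch_deg))
    # Yaw wraps around the full circle, so 450 degrees equals 90 degrees.
    x = ((yaw_deg / 360.0 + 0.5) % 1.0) * width
    # The top row of the image is straight up, the bottom row straight down.
    y = (0.5 - pitch_deg / 180.0) * height
    return (x, y)


if __name__ == "__main__":
    print(view_center_pixel(0, 0, 4096, 2048))    # (2048.0, 1024.0)
    print(view_center_pixel(90, 45, 4096, 2048))  # (3072.0, 512.0)
```

Note that the function takes no position at all: only rotation selects what is seen, which is why walking around does not change a 360° scene.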

There are two types of 360° images: monoscopic and stereoscopic. Monoscopic images are flat, with no real sense of depth perception. Google Street View is a good example of this type of image. Stereoscopic imagery uses a different image for each eye to add depth perception; this is how VR works. YouTube’s Anaglyph 3D mode uses stereoscopic imagery with two images superimposed in two different colors, producing a stereo effect when the video is viewed through old-fashioned red and cyan paper 3D glasses. It presents an immersive effect similar to a 3D movie.
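The red and cyan superimposition can be sketched in a few lines. The example below is an illustration of the general anaglyph technique, not YouTube’s implementation. It assumes each image is a grid of (red, green, blue) tuples and follows the common convention of putting the red filter over the left eye; `make_anaglyph` is a hypothetical name.

```python
def make_anaglyph(left, right):
    """Combine left-eye and right-eye images into one red/cyan anaglyph.

    Each image is a list of rows, and each row is a list of (r, g, b)
    tuples. The red channel comes from the left-eye image; the green and
    blue channels (which together make cyan) come from the right-eye image.
    """
    same_shape = len(left) == len(right) and all(
        len(lrow) == len(rrow) for lrow, rrow in zip(left, right)
    )
    if not same_shape:
        raise ValueError("left and right images must have the same dimensions")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(lrow, rrow)]
        for lrow, rrow in zip(left, right)
    ]


if __name__ == "__main__":
    left_eye = [[(200, 10, 10), (90, 90, 90)]]
    right_eye = [[(5, 100, 150), (80, 80, 80)]]
    print(make_anaglyph(left_eye, right_eye))
```

Viewed through the glasses, the red lens passes only the left-eye contribution and the cyan lens only the right-eye contribution, so each eye receives its own image.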

English Composition and American History students from St. Petersburg College have used Roundme (roundme.com), an application that creates 360° tours, for an alternative research project. The student group(s) check out a 360° camera and capture a few scenes, then use Roundme to annotate certain parts of their image to tell a story. Students also have the option to add spatial audio to produce a true sense of realism. With WebVR, users would not need to use their computer’s mouse or to touch a screen to navigate the 360° scene. Instead, their view is redirected thanks to the gyroscopic sensor inside their device, so when browsing a 360° scene in VR, all the interactive elements (hotspots, portals, sounds) are initiated when you look at those elements. Although not created using Roundme, Rebuilding Notre Dame is a fantastic example of a well-designed 360° VR documentary experience.

Virtual Reality

Essentially, VR is a computer tricking users’ brains into believing that they are in a different environment. It is a computer simulation. Headsets using six degrees of freedom (6DoF) tracking offer whole-room VR experiences, giving users a more natural freedom to explore locations, interact with objects, and dodge virtual bullets inside a game. With 6DoF, a user has the same rotational movements as 3DoF but can also move forward/backward (surge), up/down (heave), and left/right (sway) inside VR space. This is also referred to as room-scale or positional tracking. VR is stereoscopic, so when viewing something inside VR, a user can move closer to an object or move further away, and the scene adjusts because there is depth.
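A small sketch may help show what the three positional axes add. The class and function names below are hypothetical, the units are assumed to be meters and degrees, and the apparent-size calculation is ordinary geometry rather than any headset’s tracking code.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """A head pose: three rotations (as in 3DoF) plus three positions."""
    yaw: float = 0.0    # turn left/right, degrees
    pitch: float = 0.0  # look up/down, degrees
    roll: float = 0.0   # tilt side-to-side, degrees
    x: float = 0.0      # sway: move left/right, meters
    y: float = 0.0      # heave: move up/down, meters
    z: float = 0.0      # surge: move forward/backward, meters


def distance_to(pose, point):
    """Straight-line distance from the head position to a point in the room."""
    px, py, pz = point
    return math.sqrt((px - pose.x) ** 2 + (py - pose.y) ** 2 + (pz - pose.z) ** 2)


def apparent_size_deg(object_size_m, distance_m):
    """Angle an object of the given size fills in the user's view."""
    return math.degrees(2.0 * math.atan(object_size_m / (2.0 * distance_m)))


if __name__ == "__main__":
    statue = (0.0, 0.0, 3.0)      # a hypothetical 2 m object, 3 m ahead
    start = Pose6DoF()            # user at the room origin
    stepped = Pose6DoF(z=2.0)     # user has walked 2 m forward (surge)
    for pose in (start, stepped):
        d = distance_to(pose, statue)
        print(f"{d:.1f} m away, fills {apparent_size_deg(2.0, d):.1f} degrees")
```

With only rotation tracked, the distance never changes and neither does the object’s apparent size; with positional tracking, stepping forward makes the object fill more of the view.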

|

| Presenting at Synapse Summit using virtual reality (VR) in New York City |

|

| Participants at Synapse Summit in Tampa, Fla. |

|

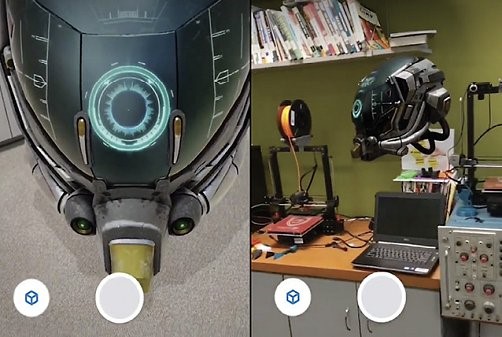

| The process of embedding 3D content in physical space |

VR is not new. In 1962, Morton Heilig, a cinematographer, introduced the Sensorama, which included a stereoscopic display, fans, odor emitters, stereo speakers, and a moving chair. Today, we are starting to see multi-sensory mask add-ons for VR headsets that include scent generators (aroma packets), tactile sensations (water, mist, heat, wind), vibration (haptics), and gustation (artificial flavoring) to help stimulate the five senses while a user is inside VR. Looking forward, eye tracking will start to become a major component in VR as well. Eye tracking is complex because eyes move very fast and shift among many targets in quick succession. Adding this to new VR headsets will provide amazingly real experiences. Tools that mirror a user’s eye movements will allow social 3D avatars to be more engaging through eye contact.

Historically, VR has tended to be more isolating than other technologies. Although not VR, the Community Virtual Library has been operating in Second Life since 2006. This virtual library was a collaborative effort by librarians worldwide. Rumii, created by Doghead Simulations (dogheadsimulations.com), a “social-virtual reality platform that enables people to educate and collaborate in a 3D virtual environment from anywhere on earth,” is the future of collaboration. Collaborating in this space is particularly appealing when multiple users can easily interact with 3D objects, watch video, present documents, listen to music, stream their computer screens, have conversations with hand gestures, and more, all inside VR. At the 2020 Synapse Summit in Tampa, Fla., a panel discussion on VR took place inside VR. I presented from my New York City hotel room. To attendees, who were scattered across the United States, it felt like I was physically there. Facebook Horizon, Bigscreen, and other social VR applications are poised to change social media forever.

In 2019, Google created its largest photogrammetry (the science of making a 3D model from photographs) capture for a detailed VR tour of Versailles. Close to 400,000 square feet (more than 4TB of data and 15 billion pixels of texture) was captured, allowing users to interact closely with more than a hundred sculptures, paintings, and other works of art. Of course, a virtual tour of Versailles is not the same as being there, but with photogrammetry techniques, and with light field imagery and volumetric video on the horizon, this technology is close to giving VR users a photo-realistic experience.

Although creating VR and other XR applications is beyond the scope of this article, a quick way to get a basic idea of how to work within this space is to try an application called CoSpaces Edu (cospaces.io). The Innovation Lab at St. Petersburg College uses CoSpaces to demonstrate how to build in 3D space while adding interactions with familiar block-based coding and/or advanced scripting.

CoSpaces resembles a course management system for VR/AR creation. It allows instructors to assign projects and view them all via WebVR-compliant browsers, mobile devices, and/or more sophisticated VR equipment. An integrated library of characters, shapes, 3D environments, and other objects is included. Personal 3D scanned objects and other files can be uploaded into the application. CoSpaces includes an option to develop for the Merge Cube (mergeedu.com/cube), which allows a user to create content for six sides of the cube and to hold the final holographic product to interact with it in a physical space. The lab offers an ongoing series of workshops that gives students opportunities to learn new ways to tell an immersive story.

Augmented Reality

AR enhances one’s reality by superimposing digital content onto the user’s real world; it works with a camera-equipped device that has AR software installed. When users point their device toward an object, the software recognizes it through computer vision technology and analyzes the image to display the augmented object on the user’s screen. The digital content, however, does not know that there are physical objects in the room too, so there is no interaction. Users are not isolated in a simulated environment, as with VR; instead, they can see their world with the digital objects in it. One drawback of AR, though, is that digital content is simply drawn on top of the camera’s view, so a digital object will not disappear behind a real-world object.
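The occlusion limitation comes down to a missing depth comparison. The sketch below is a simplified illustration for a single pixel, not the behavior of any specific AR toolkit. The function name `composite_pixel` and the idea of an optional per-pixel real-world depth value are assumptions made for the example.

```python
def composite_pixel(camera_rgb, virtual_rgb, virtual_depth_m, real_depth_m=None):
    """Choose the color shown on screen for one pixel of an AR view.

    camera_rgb      -- color from the device camera (the real world)
    virtual_rgb     -- color of the digital object at this pixel, or None
    virtual_depth_m -- distance of the digital object from the camera
    real_depth_m    -- distance of the real surface, if the device can
                       estimate it; None models AR with no depth knowledge
    """
    if virtual_rgb is None:
        return camera_rgb  # no digital content here, show the real world
    if real_depth_m is not None and real_depth_m < virtual_depth_m:
        return camera_rgb  # real object is nearer, so it hides the digital one
    return virtual_rgb     # otherwise the digital content is drawn on top


if __name__ == "__main__":
    wall = (10, 10, 10)
    hologram = (255, 0, 0)
    # No depth information: the hologram is always drawn over the camera image.
    print(composite_pixel(wall, hologram, 2.0))
    # With depth: a real object 1 m away hides a hologram placed 2 m away.
    print(composite_pixel(wall, hologram, 2.0, real_depth_m=1.0))
```

When `real_depth_m` is unavailable, the second check can never run, which is exactly the situation the paragraph above describes.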

As Google describes it, ARCore, an Android AR software developer kit (SDK), includes motion tracking to find a device’s location, uses the camera to detect flat surfaces, and estimates light paths to help cast shadows on digital objects so they seem to be real. ARKit is Apple’s AR SDK for iOS devices. Google has released an experimental web browser using ARCore and ARKit that allows users to view 3D objects in their physical space.

Library research and classroom learning are going to evolve. Students will be able to 3D-scan objects or have pre-scanned objects, such as a sculpture for a humanities course, to embed into their research paper or presentation slides and bring them into the classroom space so everyone with the appropriate technology can view the life-size object in 3D. For instance, the Laocoön and His Sons sculpture is around 6 feet tall, so seeing it as that exact size in a classroom is better than looking at a small picture of it in a textbook. Of course, the best experience is actually being physically in front of this sculpture, but since not everyone can afford to travel abroad, immersive technologies can help enhance learning. If the student is actually there, AR can enhance the physical object, too, by superimposing information over various parts of the sculpture.

The Leepa-Rattner Museum of Art’s Comin’ Back to Me: The Music and Spirit of ’69 exhibit highlighted the music and art of one of Jefferson Airplane’s founding members, Marty Balin. For part of the exhibit, the museum created an AR section showcasing seminal recordings from 1969. Ten vinyl records were on display with two nearby iPads connected to the internet running AR software. When a museum visitor picked up the record and placed it behind the iPad, a video of that band performing live jumped off the record and onto the display for many to enjoy.

AR will be able to make things invisible and vice versa. For example, drivers wearing AR glasses will be able to see through their automobiles, so there will be no more blind spots while driving. Researchers have been experimenting with smart contact lenses that will connect the wearer to the internet and give them access, almost telepathically, to billions of Internet of Things (IoT) devices, with which they can interact on multiple levels. Eventually, employees will log in to their smart glasses or via their smart contact lenses to have their AR workspace appear.

A telepresence meeting will be scheduled via Spatial (spatial.io), an AR collaborative application that exists today, in which a group of colleagues from multiple locations can interact as holograms with full-scale 3D designs or other objects. Attendees are able to pin notes to the wall for everyone to see and comment on. Virtual screens with webcams or videos can be moved anywhere in the room with a glance or a simple hand swipe. Imagine giving a presentation or performing music with the teleprompter text or sheet music displayed directly on your AR lenses, or wearing smart glasses like Google Glass, which use computer vision and other advanced machine learning capabilities to translate signs, menus, and similar items from one language to another in real time. Smartphones, watches, tablets, and desktop computers will not be necessary since the computer interface will be everywhere and the processing will happen in the cloud with high-speed 5G networks.

“A Taxonomy of Mixed Reality Visual Displays,” written in 1994 by Paul Milgram and Fumio Kishino, discusses the potential merging of real and virtual worlds (IEICE Transactions on Information Systems, vol. E77-D, no. 12, December 1994; tinyurl.com/wsgbatx). One day, it will be possible to explore the world using, for example, Google Earth VR, teleporting via Street View, similar to Star Trek characters on the transporter deck, then toggling on “AR Mode” to interact with people live on the street who are wearing AR glasses. Popular VR headsets now use “Passthrough AR,” which allows users to go beyond their VR boundaries to interact with their physical reality and vice versa.

AR can increase the accessibility of public spaces and help people with low vision overcome limitations by overlaying information on a physical object to tell them more about their environment. Someone who has difficulty reading small print can, for example, point a smart device at a street sign and tap the screen to have the text displayed in a larger font in real time.