|

FEATURE

Lingua Franca: How We Used Analytics to Describe Databases in Student Speak

by Susan Gardner Archambault, Jennifer Masunaga, and Kathryn Ryan

| Students considerably preferred the new style over the old because it had less text and was clearer and more to the point. |

Publisher descriptions of library databases are often long and complex, and they don’t conform to students’ mental models. Novice student researchers lack a big-picture understanding of research, and they may find the jargon used by database producers to be a major barrier. This can unintentionally cause extraneous cognitive load for students who encounter these database descriptions on a libguide. To address this problem, librarians at Loyola Marymount University’s (LMU) William H. Hannon Library combined data from established libguides best practices with student vocabulary mined from our own reference chat transcripts to design a new format for database descriptions that is more student-friendly. This article discusses the process for developing and testing the new format for database descriptions, as well as how it was implemented across all libguides.

Best Practices and Student Language

The libguides best practices literature asserts that because students naturally skim websites, it’s a good idea to limit the amount of text on a page and break text into smaller chunks. It is also wise to minimize clutter on the pages, increase white space, and reduce library jargon and other confusing terminology. Furthermore, students who read database descriptions on a libguide take them literally; for example, they skim the description for terms such as “full text” and “articles.”

To get a better sense of what words students seek out in a database description, we analyzed the vocabulary that LMU students use to talk about research in our online Ask a Librarian chat conversations. To extract this, we isolated the student side of our chat transcripts as a distinct corpus to capture word frequencies across six semesters using Lexos (wheatoncollege.edu/academics/special-projects-initiatives/lexomics/lexos-installers) and Voyant (voyant-tools.org), two open source web-based text analysis tools. We also performed a content analysis on the chat transcripts by using the Topic Modeling Tool (github.com/senderle/topic-modeling-tool), which is a point-and-click resource for creating and analyzing topic models produced by MALLET. It uses an algorithm to identify frequent topics or clusters of related words. For more information on the methodology used to perform these analyses, see the Algorithmic Accountability, AI, Transparency, & Text Analysis Assessment Panel slides from the 2018 Computers in Libraries conference (works.bepress.com/susan_gardner/28).
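For readers who would rather script a comparable word count than use the web-based tools, the following is a minimal sketch of the idea, not the workflow described above (which relied on Lexos and Voyant). It assumes each transcript is plain text with one `Speaker: message` line per turn; the `Patron` label, the function names, and the short term list are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical terms to track; a real list would come out of the analysis itself.
RESEARCH_TERMS = {"article", "book", "journal", "database"}


def student_lines(transcript, student_label="Patron"):
    """Keep only the student's side, assuming 'Speaker: message' lines."""
    prefix = student_label + ":"
    return [
        line[len(prefix):].strip()
        for line in transcript.splitlines()
        if line.startswith(prefix)
    ]


def term_frequencies(transcripts, terms=RESEARCH_TERMS):
    """Count how often each tracked term appears in the student-only corpus."""
    counts = Counter()
    for transcript in transcripts:
        for line in student_lines(transcript):
            for word in re.findall(r"[a-z]+", line.lower()):
                # Crude plural folding: "articles" is counted as "article".
                if word.endswith("s") and word[:-1] in terms:
                    word = word[:-1]
                if word in terms:
                    counts[word] += 1
    return counts
```

Calling `term_frequencies(list_of_transcripts).most_common()` would give a ranked list similar in shape to the one reported below, although real transcripts would need more careful tokenizing and stemming than this sketch provides.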

There were some key findings from our analyses that we wanted to incorporate into our new database-description format. We discovered that the top research terms used by students during chat sessions were as follows:

- Article (5,039 times)

- Book (3,644 times)

- Journal (1,506 times)

- Database (1,304 times)

- Scholarly (539 times)

- Primary (530 times)

- Ebook (346 times)

- Peer review (247 times)

- Newspaper/news (222 times)

- Chapter (141 times)

- Popular (98 times)

We made sure to include these terms in our new formula for rewriting the database descriptions. We discovered from the chat content analysis that students often referred to the database by vendor name rather than its real name (e.g., EBSCOhost rather than Academic Search Complete), so we included the vendor name in our new description format. The content analysis further revealed many questions about how to find the full text and how to find the best database for a given topic, so we also incorporated these into the new format.

Proposed New Database Description Format

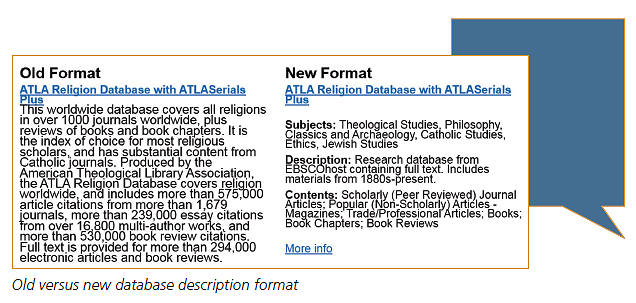

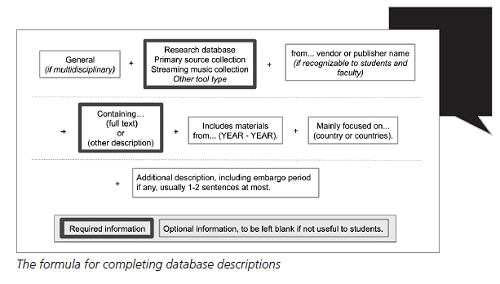

Recognizing that students skim quickly, the new format breaks down the database description into shorter chunks of information with three separate sections rather than putting it all into one long paragraph like the old description format from the publisher. The first section is for Subjects, including the most prominent subjects covered in the database based on LMU majors and minors. The second section is for Description, and it includes details such as whether full text is available, the tool type, the vendor name, the time period of the content, and the geographic area of focus. The last section is for Contents, and it lists the prominent content types from a fixed list based on student-friendly terminology.

Usability Study Overview

To test our new database description format in libguides, we used Intercept Task-Based Usability Testing. This involves recruiting volunteers on-the-fly; no pre-testing or sorting of testers is necessary. There are a few benefits to the intercept method—it involves less formal preparation of test subjects, and it can provide a more authentic user experience. It can also be done as low-tech as necessary using only a facilitator and the test website. However, facilitators have less control over their test population and the length of time testing takes.

Research Questions

- Is there a significant difference in the success rate of students who use database descriptions written in a single paragraph format versus students who use database descriptions distributed across three categories labeled description, subjects, and contents?

- Is there a significant difference in student preferences for database descriptions written in a single paragraph format versus database descriptions distributed across three categories labeled description, subjects, and contents?

In preparation for the usability study, we created a near-duplicate of our Theological Studies libguide and swapped the old database descriptions for ones written in the proposed new style. Nothing else was altered in the libguide, and we used live database links. Our setup involved a table and chairs placed in the middle of our library’s Information Commons. From this location, we recruited 10 volunteer undergraduate students in the immediate area and incentivized their participation with Starbucks gift cards. Each session took around 5–7 minutes, and we recorded it (with permission) using Camtasia software, which captured both screen and audio. The test involved one facilitator and one note taker, although these could be combined into one role if necessary. Students completed two tasks and used the Think Aloud method, which involves users describing their intentions, thoughts, and opinions as they navigate the libguide.

Task 1

You are writing a paper on Buddhism that requires two peer-reviewed articles. Use the theology libguide to help you find two databases with peer-reviewed articles on Buddhism. Name the two databases that you found.

For Task 1, we assigned our 10 participants to one of two groups. The five participants in Group A used the modified Theological Studies Usability Testing libguide with new database descriptions to complete the task. The five participants in Group B used the existing Theological Studies libguide with database descriptions written in the original long paragraph format. We recorded the following variables for each participant: time on task, success rate, and number of wrong paths.

Task 2

Complete a Likert scale question regarding ease of use of Style A database descriptions versus Style B. Any additional verbal comments were recorded.

For Task 2, all 10 participants were given two Likert scale questions asking them to rate their level of agreement with a statement about the ease of use for the database description in the new format compared to the old format.

Performance Results

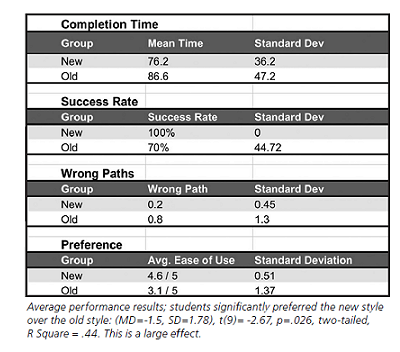

Participants who completed Task 1 using the database descriptions written in the new format finished approximately 13% faster. They also had a higher success rate than students using the old format, and they took fewer wrong paths. With only five participants in each group, however, the statistical power was too low for these differences to reach statistical significance. For Task 2, students considerably preferred the new style over the old because it had less text and was clearer and more to the point. Because all 10 participants completed this second task, the power was large enough for the preference to be statistically significant using the t-test for two dependent samples. In comments, students admitted they read quickly and want the fastest scannable option, so the shorter descriptions more closely matched their research style.
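For readers unfamiliar with the t-test for two dependent samples, the sketch below shows how the statistic is computed from paired ratings, where each participant rates both styles. It is an illustration only: the function name is hypothetical, and no ratings from the study are reproduced here.

```python
import math
import statistics


def paired_t_statistic(new_scores, old_scores):
    """Return (t, degrees_of_freedom) for two dependent samples.

    Each position in the two lists must belong to the same participant.
    """
    if len(new_scores) != len(old_scores):
        raise ValueError("Each participant needs a rating for both styles.")
    # Work on the per-participant differences rather than the raw scores.
    diffs = [new - old for new, old in zip(new_scores, old_scores)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("All differences are identical; t is undefined.")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1
```

With 10 participants, the test has 9 degrees of freedom, and the resulting t value is compared against the critical value for the chosen significance level (about 2.262 for a two-tailed test at the 0.05 level).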

Student Comments

“I like style A because it has, like, less text. … Like when you do Powerpoints (sp) and stuff, you don’t want to do text, like lots of text-heavy [things] because it kind of scares away the people, and [here] you can see just exactly what you need.”

“As college students, we’re, like, always looking for the fastest way to get information, so I would think these subsections like subjects, description, content just make it easier—for everything to be laid out.”

“I would be more likely to read it [Style A]. … I mean, this one [Style B] isn’t bad, but usually when I’m searching, I’m just, like, stressed out and read it quick.”

Implementing the New Format

Once we decided to change the format of our database descriptions, we needed to find a way to efficiently make changes to about 295 database descriptions in libguides. With only eight staffers in the reference department, who were not subject specialists in all of the databases’ content, we reached out for help from liaison librarians throughout the library. We assigned each librarian about 10 databases (a few more if subject specialties were bigger). We assigned a few reference librarians with larger disciplines 20–30 databases.

In order to make the process of writing a database description as easy as possible and have some control over the order and vocabulary being used, we created a Qualtrics form to walk liaisons through a series of questions. For the Subjects field, librarians chose from a customized list of majors and minors and graduate programs at LMU that they felt were most prominent in the database. They tried to restrict these subjects to four or fewer to limit student overload, and if the database had more than four, they used “many” instead.
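The four-subject rule described above is simple enough to express in a few lines. This is a minimal sketch of that rule, assuming the chosen subjects arrive as a list of strings; the function name and the comma-separated output style are assumptions for illustration.

```python
def format_subjects(subjects, limit=4):
    """Join up to `limit` subjects; fall back to "many" when there are more."""
    chosen = [s.strip() for s in subjects if s.strip()]
    if len(chosen) > limit:
        return "many"
    return ", ".join(chosen)
```

For example, `format_subjects(["Theological Studies", "Philosophy"])` returns `"Theological Studies, Philosophy"`, while a list of five or more subjects returns `"many"`.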

The Description section required more questions in Qualtrics, using a combination of multiple choice and short answer. We tried to break down the various aspects of the description into parts. Some were multiple-choice questions about, for example, the database publisher if its name was recognizable (EBSCOhost, ProQuest), whether the database was a genuinely multidisciplinary database, or what type of content it contained (full text, abstract, video). Other short-answer questions tried to fill in any other useful information for students, including the time period covered by the materials, specific geographic areas, whether there was any embargo, and any other useful descriptive information (such as specialties of the database).

A customized multiple-choice fixed list was used for the Contents section of the database description based on frequent student vocabulary. Librarians could choose up to five content types, including ebooks, scholarly (peer-reviewed) journal articles, popular (non-scholarly) articles, newspapers, and primary sources.

Training the Liaisons

We invited librarians who were helping to build descriptions to one of two workshops for training, with lunch provided at the end of the workshop. At the event, they received a print version of the Qualtrics questionnaire to help them think through their responses and work them out on paper first if that was helpful to them. Librarians also received a few examples of completed descriptions to help them picture the final product. Then, they had time to write some descriptions during the workshop. Librarians completed about 50 database descriptions during each of the workshops (90 minutes each), and they received a due date for all submissions 2 weeks later in order to complete any remaining databases on their list.

Once the librarians completed each Qualtrics survey for each database they were assigned, we exported those results from Qualtrics as an Excel file. That data was copied into an Excel spreadsheet with formulas built in to “stitch” the survey entries together into phrases that would make sense in the final description. For example, in the Description section, we wanted to translate a series of survey entries such as “Is this multidisciplinary? Yes,” “Publisher: ProQuest,” “Full Text: Yes,” and “Time Period: 1700–1850” into a phrase like “Multidisciplinary research database from ProQuest containing full text. Includes materials from 1700–1850.”
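The stitching logic in the example above can also be sketched outside of Excel. The following is a rough Python equivalent of that one example, not a reproduction of the actual spreadsheet formulas; the function name, the parameter names, and the "Research database" wording for non-multidisciplinary databases are assumptions.

```python
def stitch_description(multidisciplinary, publisher, full_text, time_period):
    """Turn a handful of survey answers into Description sentences."""
    # Assumed fallback wording when the database is not multidisciplinary.
    opening = (
        "Multidisciplinary research database"
        if multidisciplinary
        else "Research database"
    )
    if publisher:
        opening += f" from {publisher}"
    if full_text:
        opening += " containing full text"
    sentences = [opening + "."]
    # Optional short-answer fields become their own sentences.
    if time_period:
        sentences.append(f"Includes materials from {time_period}.")
    return " ".join(sentences)
```

Building each sentence from optional pieces, and joining whole sentences only at the end, avoids many of the stray spaces and dangling commas that arise when blank fields are concatenated directly.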

Behind the Scenes

Before all the librarians submitted their descriptions, we did some preliminary testing of the spreadsheet formulas by inputting some descriptions within the reference department. Because the Excel formulas concatenated several fields together, sometimes it was necessary to add spaces or commas or to change capitalization choices to make sentences grammatically correct. It was necessary to make some more tweaks as librarians outside of reference started using the form—because the reference department had worked on building the questions and the descriptions so much, we found we had some internal assumptions that we had forgotten to share with the rest of the creators. As much as possible, we changed question prompts in the Qualtrics form or edited the formulas in the spreadsheet to solve these issues. Once the descriptions were generated in Excel, the reference department reviewed the descriptions in batches to remove any lingering issues such as extra spaces, missing phrases, or strange wording before they were added to libguides.
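Part of this batch review could be assisted by a small script that flags likely concatenation problems for a human to check. The sketch below is an assumption about what such a check might look like, not the review process used here; the function name and the specific rules are hypothetical.

```python
import re


def find_issues(description):
    """Flag common concatenation artifacts for a human reviewer to check."""
    issues = []
    if re.search(r" {2,}", description):
        issues.append("extra spaces")
    if re.search(r" [,.;]", description):
        issues.append("space before punctuation")
    if re.search(r"[,.;][A-Za-z]", description):
        issues.append("missing space after punctuation")
    stripped = description.strip()
    if stripped and not stripped.endswith("."):
        issues.append("missing final period")
    return issues
```

These rules only flag candidates. Abbreviations such as "U.S." would trigger a false positive, and missing phrases or strange wording still require a person to read the description.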

Once the descriptions were formatted correctly, the final step was adding them into the libguides A–Z List. Because we wanted to use bold text to highlight the title and sections of the new descriptions (Subjects, Description, Contents), we had to add some HTML formatting to the descriptions before adding them. For this, we created another small Excel spreadsheet that took the final output of the previous spreadsheet in plain text, to get rid of all of the previous formulas, and added a field that concatenated each section (database name, Subjects, Description, and Contents) with typical HTML formatting for bold headers. Contract staffers working in the library overnight then copied and pasted these HTML-formatted descriptions into the libguides A–Z List description boxes.
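The HTML concatenation step can be sketched in the same way. The exact markup used in the spreadsheet is not given here, so the `<strong>` and `<br>` tags below are assumptions standing in for "typical HTML formatting for bold headers," and the function name is hypothetical.

```python
from html import escape


def to_html(name, subjects, description, contents):
    """Wrap the database name and section labels in bold HTML."""
    # Escape the text so characters such as "&" display correctly.
    parts = [f"<strong>{escape(name)}</strong>"]
    sections = (
        ("Subjects", subjects),
        ("Description", description),
        ("Contents", contents),
    )
    for label, text in sections:
        parts.append(f"<strong>{label}:</strong> {escape(text)}")
    return "<br>".join(parts)
```

Escaping the text before wrapping it matters for database names that contain an ampersand, which would otherwise produce invalid HTML when pasted into a description box.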

Wrap-up

Making database descriptions in libguides more student-friendly is important for helping students use databases effectively in their research. It is also useful to customize libguides for your precise student population. This article discussed the process for developing and testing a new format for database descriptions, as well as the implementation process across all libguides. The same procedure could be used to fix other things in libguides, such as accessibility or heading elements. When your workflow includes a large group of liaison librarians, it is necessary to create a basic structure for your descriptions to achieve standardization. Also, it is important to spell out exactly how you want things such as date ranges and geographic locations formatted; otherwise, you will not achieve consistency. Expect it to be a challenge to build formulas that translate your multiple-choice elements into complete sentences. Finally, it’s a good idea to have a review team oversee the final product to catch small errors (such as missing spaces or missing content) before it goes live. |