FEATURE

Linked Data in Libraries: Status and Future Direction

by Robin Hastings

| Librarians are in an excellent position to take the data already contained in millions of catalog records and set it free to live on the web to be used in ways they might never have been able to previously imagine. |

With the introduction of RDA (Resource Description and Access) in cataloging and of XML and Semantic Web technologies on the general web, the idea of creating links between data that computers can understand has been growing in popularity. Right now, the information found in both library catalogs and on the web is generally readable and understandable by humans but not optimized for computer understanding. Text is put up on the web without markup that gives it structural and semantic meaning, which prevents the automatic linking of one piece of information to another. Fixing that issue is the focus of the Semantic Web in general and linked data in particular.

The larger Semantic Web is concerned with web-based markup that describes the meaning of the text being marked up. Ordinary HTML marks up strings of text without conveying their meaning: it tells browsers how to display text (as a paragraph, as bold text, etc.) but says nothing about what the text means, which makes the content difficult for computers to process. The goal of the Semantic Web is to move from markup of strings to markup of things: objects (mostly text strings right now, but images, documents, and other objects could be marked up semantically as well) that have meaning to the computer programs that parse the markup as well as to the humans who consume the content.

The new way to do things, via linked data in the Semantic Web, consists of granular objects marked up as “things” that can be processed programmatically by a computer. Marking up objects this way, rather than just publishing strings of text, will make information more accessible to computers as well as to humans and make it far easier for computers to parse and use the information posted on the web.

Definition and Explanation of Linked Data

There are several definitions of linked data in library literature. It is variously described as a set of best practices for publishing and connecting data structured so that machines can use it, and as the actual relationships between data objects, made readable by both machines and humans and far more granular than the document-to-document links the web has now. In essence, linked data is the coding of data with semantic meaning for machine use.

In 2006, Tim Berners-Lee laid out the essential principles of linked data as:

- Using URIs (Uniform Resource Identifiers) as names for objects described

- Using HTTP URIs so that people can look up and access those names

- When someone does look up a URI, providing information using standards such as RDF (Resource Description Framework), JSON (JavaScript Object Notation), and Turtle

- Including links to other objects (via URI) so that other objects can be discovered

These principles are what define linked data. It is not a standard or protocol in and of itself, but rather the use of standards to provide meaning for computers.
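The second and third principles can be illustrated with a small sketch of how a program might ask for machine-readable data about a thing. The example only builds the HTTP request and does not send it. The URI `http://example.org/book/123` is a hypothetical placeholder, and asking for a format through the `Accept` header (HTTP content negotiation) is one common approach assumed here, not something the principles themselves require.

```python
from urllib.request import Request

# Media types for the formats named in the list above.
MEDIA_TYPES = {
    "turtle": "text/turtle",
    "rdfxml": "application/rdf+xml",
    "json": "application/json",
}

def build_lookup_request(uri: str, fmt: str = "turtle") -> Request:
    """Build (but do not send) an HTTP GET request for the given URI,
    asking the server for one of the formats above via the Accept header."""
    return Request(uri, headers={"Accept": MEDIA_TYPES[fmt]})

# Hypothetical URI naming a book; example.org is reserved for examples.
request = build_lookup_request("http://example.org/book/123", "turtle")
print(request.get_method(), request.full_url, request.get_header("Accept"))
```

A server that publishes linked data could answer such a request with a description of the book in the requested format, including links to other URIs that a program can follow in turn.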

|

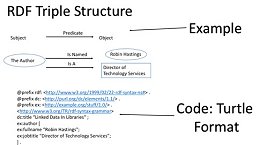

Figure 1: Linking data using triples |

|

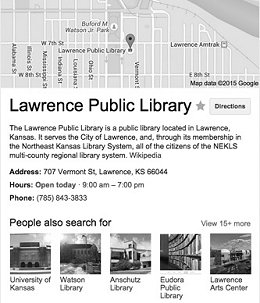

Figure 2: Linked data is behind Google’s Knowledge Graph. |

| Linked open datasets published through April 2014. Source credit: CC BY-SA Linking Open Data Cloud Diagram 2014, by Max Schmachtenberg, Christian Bizer, Anja Jentzsch, and Richard Cyganiak. lod-cloud.net |

One of the standards commonly used in linked data projects is RDF, which provides a way of linking data using triples (see Figure 1). Each triple consists of a subject, a predicate, and an object, just like a simple English sentence. In the library world, a triple may provide information about a book, such as its publisher, in the form of, “This book (noun with unique URI) has as publisher (verb with unique URI) Big Publishing Company (object with unique URI).” These triples can be linked to one another through the use of URIs. A URI acts as a permanent identifier for any object on the web. The two standards work together: URIs serve as the nouns, verbs, and objects in RDF triples and constitute the essential semantics of the linked data information. Other standards used in creating and publishing linked data include JSON and Turtle, a text syntax created specifically for writing RDF. The library projects introduced later in this article provide their data in all three of these notations.
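As a rough illustration, the publisher statement above can be sketched in a few lines of Python. The `example.org` URIs for the book and the publisher are hypothetical, the predicate is assumed to be the Dublin Core “publisher” term, and the output uses N-Triples, a simple line-based RDF notation chosen here only because it is easy to produce by hand.

```python
from typing import NamedTuple

class Triple(NamedTuple):
    subject: str    # URI of the thing being described (the noun)
    predicate: str  # URI of the relationship (the verb)
    obj: str        # URI of the related thing (the object)

# Hypothetical URIs for illustration; the predicate is a Dublin Core term.
BOOK = "http://example.org/book/123"
PUBLISHER = "http://example.org/org/big-publishing-company"
HAS_PUBLISHER = "http://purl.org/dc/terms/publisher"

def to_ntriples(triple: Triple) -> str:
    """Serialize one URI-only triple as a single line of N-Triples."""
    return f"<{triple.subject}> <{triple.predicate}> <{triple.obj}> ."

statement = Triple(BOOK, HAS_PUBLISHER, PUBLISHER)
print(to_ntriples(statement))
```

Because every part of the statement is a URI, another dataset that uses the same publisher URI is automatically talking about the same publisher.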

Another standard that makes use of the principles of linked data is the recently adopted RDA cataloging standard. RDA is the next-generation cataloging standard that will, in time, replace human-readable MARC records with computer-readable RDA records. Both the Library of Congress (LC) and the British Library have been using RDA cataloging since 2013, and the transition is already happening in many larger libraries. This standard can help libraries share the information and data that they collect and organize with each other and with outside organizations that need it. By making it easy to define each RDA property as an RDF triple (as in the earlier example of the book’s publisher), RDA will also make the data currently hidden in the non-semantic MARC record format more searchable and findable by general web searches. This will greatly expand the usefulness and reach of the data that libraries have traditionally made available only through non-semantic markup in HTML-based online catalogs.

Use of Linked Data in Libraries

Linked data is not yet widely used in any field, including libraries. There are some barriers to libraries deciding to use it, including the relative complexity of the technology; the aversion to risk many libraries have; and economic, political, and system limitations. However, some fairly recent forays into linked data outside of libraries have shown that the technology can be useful. Google’s Knowledge Graph uses linked data to find related information in searches, and Facebook’s Open Graph protocol uses it to connect people based on interests and common contacts. Both projects provide graphs of linked data that connect concepts in webs of meaning, as opposed to the simple one-way links that are common in documents marked up in HTML (see Figure 2). In the figure, Google has pulled semantically relevant information about the Lawrence Public Library and put it in an information box that shows up alongside search results for this library. Directions based on the library’s address, the library’s hours, and other information that Google was able to determine from the semantic markup used on the library’s website are all neatly presented for the searcher to use.

If the library uses linked data to present its materials on the web, a search for a book could bring up a similar graph, with information about the book and its availability in the library at the moment of searching. Links to the author and publisher and other information about the book would also be made available through the graph interface. Libraries already collect and manage this information—making it useful for searchers on the web is an obvious next step.

Governments are also using linked data. The U.S. government’s Data.gov site, for example, is converting data to RDF for others to use. According to Data.gov, there are more than 6.4 billion triples (aka information objects) available for use. This data is part of the linked open data cloud and is usable by anyone who wants to enrich his or her own datasets. Here are some examples of data collections:

- Local severe weather warning systems in Missouri

- Product recall data from the federal government

- Higher education datasets, including information on every institution of higher education that participates in the federal student financial aid programs

Connecting with open, freely usable datasets like these will be a boon for libraries as they make their data linkable via URIs on the web. For example, a book in the collection (the noun in the triple) might have as its publisher (the verb) one of the institutions of higher education included in the previously mentioned Data.gov dataset (the object). Because the URI for the university is shared by the object in the material’s triple and the Data.gov dataset, a computer could find the dataset information linked to that same URI and bring in a rich source of data for the library’s material.
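The linking step described here can be sketched as a lookup on a shared URI. Everything in this example is invented for illustration: the `example.org` URIs, the vocabulary terms, and the facts about the university are placeholders and are not taken from Data.gov.

```python
# Hypothetical URI for a university, shared by both datasets.
UNIVERSITY = "http://example.org/institution/example-university"

# Triples from the library's catalog: (subject, predicate, object).
library_triples = [
    ("http://example.org/book/123",
     "http://purl.org/dc/terms/publisher",
     UNIVERSITY),
]

# Triples from an external open dataset describing the same URI.
# The predicates and values are invented for illustration.
external_triples = [
    (UNIVERSITY, "http://example.org/vocab/name", "Example University"),
    (UNIVERSITY, "http://example.org/vocab/state", "Kansas"),
]

def enrich(local, external):
    """For each local triple, collect the external facts whose subject
    is the same URI as the local triple's object."""
    by_subject = {}
    for subject, predicate, value in external:
        by_subject.setdefault(subject, []).append((predicate, value))
    return {(s, p, o): by_subject.get(o, []) for (s, p, o) in local}

for triple, facts in enrich(library_triples, external_triples).items():
    print(triple[0], "is published by", triple[2])
    for predicate, value in facts:
        print("   ", predicate, "=", value)
```

The match depends entirely on both datasets using the identical URI for the university. No string comparison of names is involved, which is what makes the link reliable for a computer.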

In the library world, there are a few initiatives that have begun to take shape. One of the first examples of linked data use in libraries is the Swedish Union Catalog, LIBRIS (libris.kb.se), which began sharing linked data in 2008. Other examples include the British National Bibliography (bnb.data.bl.uk) and Open Library (openlibrary.org). Both use linked data to provide information on books and authors in various formats (RDF, JSON, Turtle, etc.) for open reuse. They also have extensive records of books available. Open Library in particular has, as one of its goals, the provision of a record and, by extension, a webpage for every book ever published.

The German libraries of the North-Rhine-Westphalian library network began to publish their data as linked open data (linked data that has no restrictions on use) in March 2010. That effort was subsequently expanded into a service called Culturegraph, a linked open data service that intends to create an individual information object for each kind of material held by libraries in Germany. The National Library of France has also developed a project that brings together catalog data in MARC format, archive data in EAD (Encoded Archival Description) format, and digital resource data in Dublin Core format in a single data repository (data.bnf.fr). All the data from the French catalogs, archives, and digital resource projects is extracted and gathered automatically into that repository.

Future Directions

Since so few U.S. libraries have their data in linked data format yet, there is much to do to make library bibliographic data available for consumption on the Semantic Web. Libraries have, as their mission, the collection and sharing of information. To fulfill it, they must have records that are discoverable and readable by computer programs, which is a primary goal of linked data. The LC is working on a linked data project, BIBFRAME, that is still in progress. Announced by the LC in 2011, BIBFRAME will be built with RDA and FRBR-like elements.

There are other, private projects in place as well. One of these—the Linked Data For Libraries project (LD4L; ld4l.org)—is a collaboration of Cornell University Library, Harvard’s Library Innovation Lab, and Stanford University Libraries. They are working with BIBFRAME and other projects to get linked library data out and available to libraries. Another collaborative project in the works here in the U.S. is the Libhub Initiative (libhub.org). It is in the beginning stage, but is already starting to gather data (MARC records, mostly) from partnering libraries and preparing to convert that MARC data to linked data.

The other projects that are already in place in Sweden, Britain, France, and Germany also have more to do and are still works in progress—although they have already begun publishing the data they have to date. With RDA and FRBR-ized data models appearing in libraries, the next obvious step is to use these models to create data that is capable of linking with other data on the web. With these technologies in place and some robust examples of linked data in European countries and individual organizations here in the U.S., libraries have a road map for creating and publishing their own linked datasets.

There are definite advantages to libraries using and sharing linked data. When data is made available in common linked data formats, libraries no longer have to reinvent the wheel by creating the same data over and over. It will be easy enough to link to common data about books and materials and add their own information (location and availability information, for example) on top of that common data. This will help libraries by saving time and money in re-creating data and make the information libraries provide more accessible to everyone. That kind of advantage may be enough to overcome the barriers to use that were mentioned previously.

Conclusion

A common sentiment among those who write about linked data in libraries is that libraries must be not only on the web, but of the web. They must switch their thinking from the old document-centric model to a more data-centric model, and linked data is one way to accomplish that. Librarians are in an excellent position to take the data already contained in millions of catalog records and set it free to live on the web to be used in ways they might never have been able to previously imagine. If libraries provide their data in computer-readable formats on the web, the services that follow are as likely to come from outside the library world as from inside. Many people who are not connected to libraries will find the data and be inspired to create services and applications that are useful and unique. Library data can also be incredibly useful as part of mashups of data on the web. And it can make the data a searcher needs easier to find and more useful than the strings of text that web searches generally return today.

With this all in mind, libraries should at least begin considering how to transform their MARC data into the standards of linked data. Creating RDF triples and publishing the data they hold in linked open datasets for everyone to use will benefit libraries by making their data more findable and accessible on the web, and it will provide a wealth of high-quality information for other institutions and individuals to use in new and exciting ways. There are already some MARC to RDF converters (w3.org/wiki/ConverterToRdf#MARC) in place, with more sure to come. Right now, the technological challenges to creating and publishing linked data are real, but the examples of other organizations, both library and non-library, that are already doing it should be an incentive for libraries to begin the work. Librarians have a chance to get in front of this new technology and lead the way to the Semantic Web by adopting linked data (and linked open data) principles and converting their old document-centric information to data-centric objects that both computers and people can use to create new worlds of information on the web.
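To give a feel for what such a conversion involves, here is a minimal sketch that turns a few MARC fields into triples. It is not how any particular converter works. The record is a heavily simplified stand-in for real MARC data, the record URI is hypothetical, and the mapping from MARC fields to Dublin Core terms is an assumption made for illustration.

```python
# A heavily simplified stand-in for a MARC record: (tag, subfield) -> value.
# Real MARC records have indicators, repeatable fields, and many more tags.
marc_record = {
    ("245", "a"): "An Example Title",        # title statement
    ("100", "a"): "Author, Example",         # main entry, personal name
    ("260", "b"): "Big Publishing Company",  # name of publisher
}

DCTERMS = "http://purl.org/dc/terms/"

# Assumed mapping from MARC fields to Dublin Core terms (illustrative only).
FIELD_TO_PREDICATE = {
    ("245", "a"): DCTERMS + "title",
    ("100", "a"): DCTERMS + "creator",
    ("260", "b"): DCTERMS + "publisher",
}

def marc_to_triples(record_uri, record):
    """Turn each mapped MARC field into a (subject, predicate, literal) triple.
    Fields without a mapping are skipped."""
    triples = []
    for field, value in record.items():
        predicate = FIELD_TO_PREDICATE.get(field)
        if predicate is not None:
            triples.append((record_uri, predicate, value))
    return triples

# Hypothetical URI for the record being converted.
for s, p, o in marc_to_triples("http://example.org/book/123", marc_record):
    print(f'<{s}> <{p}> "{o}" .')
```

In this sketch the author and publisher remain plain text strings. A fuller conversion would replace them with URIs so that they can link to other datasets, which is where much of the real work lies.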
