Vol. 20, No. 2 • February 2000

FEATURE

The Digital Atheneum: New Technologies for Restoring and Preserving Old Documents

by W. Brent Seales, James Griffioen, Kevin Kiernan, Cheng Jiun Yuan, and Linda Cantara

We have observed that basic problems of access faced by humanities scholars frequently make for daunting technical challenges for computer scientists as well. In that spirit, the Digital Atheneum project is developing leading-edge computer techniques and algorithms to digitize damaged manuscripts and then restore markings and other content that are no longer visible. The project is also researching new methods that will help editors to access, search, transcribe, edit, view, and enhance or annotate restored collections. An overall goal of the project is to package these new algorithms and access methods into a toolkit for creating digital editions, thereby making it easier for other humanities scholars to create digital editions tailored to their own needs.

The Cotton Library Collection from England

This great collection of ancient and medieval manuscripts was acquired by the 17th-century antiquary Sir Robert Cotton in the century following the dissolution of the monasteries in England. His magnificent collection eventually became one of the founding collections of the British Museum when it was founded in 1753. Twenty-two years earlier, however, a fire had ravaged the Cotton Library, destroying some manuscripts, damaging many (including Beowulf), and severely devastating others, seemingly beyond the possibility of restoration. The burnt fragments of the most severely damaged, sometimes unidentified, manuscripts were placed in drawers in a garret of the British Museum, where they remained forgotten for nearly a century. In the mid-19th century, a comprehensive program was undertaken to restore these newly found manuscripts by inlaying each damaged vellum leaf in a separate paper frame, then rebinding the framed leaves as manuscript books. The inlaid frames kept the brittle edges of the vellum leaves from crumbling away, while the rebinding of the loose framed leaves as books prevented folios from being lost or misplaced.

The manuscripts we are working with now date from approximately the 10th to the 11th centuries and are written primarily in Old English, although some are written in Latin and others, such as a Latin-Old English glossary, include both. One of the manuscripts we are working on is a unique prosimetrical version (written in both prose and poetry) of King Alfred the Great’s Old English translation of The Consolation of Philosophy, a work by the Roman philosopher Boethius that was later also translated by both Geoffrey Chaucer and Queen Elizabeth I. Other manuscript fragments in the group include saints’ lives, biblical texts, homilies, the Anglo-Saxon Chronicle, and Bede’s Ecclesiastical History of the English People.

The Nature of the Damage

Although the 19th-century restoration was a masterful accomplishment, many of the manuscripts remain quite illegible. Few modern scholars have attempted to read them, much less edit and publish them. The inaccessibility of the texts stems primarily from damage sustained in the fire and its aftermath, including the water used to extinguish it. For example, in many instances, the scorching and charring of the vellum render letters illegible or invisible in ordinary light. Words frequently curl around singed or crumbled edges. Holes, gaps, and fissures caused by burning obliterate partial and entire letters and words. In some cases, the letters of a single word are widely separated from each other and individual letters are frequently split apart. Shrinkage of the vellum often distorts once horizontally aligned script into puzzling undulations. And, of course, much of the vellum has been totally annihilated, the text written on it gone forever.

In many cases, the earlier attempts at preservation have themselves contributed to the illegibility and inaccessibility of the texts. The protective paper frames, for example, necessarily cover many letters and parts of letters along the damaged edges. During the 19th-century restoration, some illegible fragments were inadvertently bound in the wrong order, sometimes upside down or backwards, sometimes even in the wrong manuscript. In other instances, multiple fragments of a single manuscript leaf were misidentified and erroneously bound as separate pages. Further damage was occasionally caused by tape, paste, and gauze applied in later times to re-secure parts of text that had come loose, or by chemical reagents applied in usually disastrous efforts to recover illegible readings.

How We’re Restoring the Illegible Text with Technology

Digitizing the manuscripts makes it possible to restore the correct order of the pages and provides improved access to them. However, even the best digital camera cannot restore text that is hidden or invisible to the human eye. One focus of our work, then, is on extracting previously hidden text from these badly damaged manuscripts. We are using fiber-optic light to illuminate letters covered by the paper binding frames, information otherwise hidden to both the camera and the naked eye.

Here’s how: A page is secured vertically with clamps and the digital camera is set on a tripod facing it. Fiber-optic light (a cold, bright light source) behind the paper frame reveals the covered letters and the camera digitizes them.

Figure 1

Ultraviolet fluorescence is particularly useful for recovering faded or erased text. Ultraviolet light, which lies outside the spectrum of human vision, often causes the faded or erased iron-based inks of these manuscripts to fluoresce, and thus to show up clearly. Conventional ultraviolet photography requires long exposure times and is prohibitively expensive, time-consuming, and potentially destructive. We have found, however, that a digital camera can quickly capture the effects caused by ultraviolet fluorescence at its higher scan rate, thus eliminating the need for long exposures. Image-processing techniques subsequently produce images that often clearly reveal formerly invisible text. (See Figure 1.)
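
The article does not say which image-processing techniques are applied to the ultraviolet captures. As one plausible illustration only, the sketch below performs a linear contrast stretch, a common first step for making faint gray-level differences visible. The function name `contrast_stretch`, its percentile parameters, and the list-of-rows image representation are assumptions made for this example, not part of the project's software.

```python
def contrast_stretch(image, low_pct=2.0, high_pct=98.0):
    """Linearly rescale gray levels so the chosen percentile range spans 0-255.

    `image` is a list of rows of integer gray levels (hypothetical format).
    Faint ink that differs only slightly from the vellum background becomes
    easier to see once that small difference is spread over the full range.
    """
    values = sorted(v for row in image for v in row)
    n = len(values)
    lo = values[int((n - 1) * low_pct / 100.0)]
    hi = values[int((n - 1) * high_pct / 100.0)]
    if hi == lo:
        # A perfectly flat image has nothing to stretch; return a copy.
        return [row[:] for row in image]
    scale = 255.0 / (hi - lo)
    return [
        [min(255, max(0, round((v - lo) * scale))) for v in row]
        for row in image
    ]
```

With the percentiles set to 0 and 100, a patch whose gray levels range only from 100 to 110 is spread across the whole 0 to 255 scale. The default percentiles clip a small share of extreme pixels so that a few specks do not dominate the scaling.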

Reconstructing the Badly Damaged Manuscripts

The leaves of burned vellum manuscripts rarely lie completely flat, in spite of conservators’ generally successful efforts to smooth them out. Moreover, acidic paper was used for some of the inlaid frames. Besides turning yellow, the frames sometimes buckle and the vellum leaves shift. We are exploring digital ways of flattening the leaves to take account of these three-dimensional distortions. One potential technique attempts to recover the original shape of the manuscript leaves by capturing depth dimensions. Depth information may help us determine how the surface of a leaf has warped or wrinkled from extreme heat or water damage, as well as the effects these deformities have had on the text itself or indeed on the images acquired by the digital camera.
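
To make the idea of using depth for flattening concrete, here is a deliberately simplified sketch. It assumes a single scanline across a leaf, with surface heights sampled at even horizontal spacing, and "unrolls" that profile by measuring distance along the surface instead of along the camera's horizontal axis. The function name and the one-dimensional model are assumptions for illustration; a real leaf warps in two directions and the project's actual technique is not described in the article.

```python
import math


def flattened_positions(heights, dx=1.0):
    """Return each sample's distance along the surface, starting from 0.

    `heights` holds surface heights at evenly spaced horizontal steps `dx`
    (both hypothetical inputs). On a flat leaf the result equals the
    horizontal positions; on a wrinkled leaf the samples spread farther
    apart, which approximates where they sat before the vellum buckled.
    """
    positions = [0.0]
    for z0, z1 in zip(heights, heights[1:]):
        # Length of the surface segment between two neighboring samples.
        positions.append(positions[-1] + math.hypot(dx, z1 - z0))
    return positions
```

A flat profile such as `[0, 0, 0]` maps to evenly spaced positions, while a steep rise between two samples lengthens the gap between them in the flattened result.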

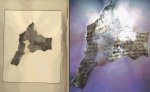

Figure 2

Depth information may also help solve the problem of accurately reuniting physically separate fragments. During the 19th-century restoration, some fragments were correctly bound together on the same page, but because of the medium could not be rejoined, increasing the difficulty of reading the text. In Figure 2, a digitized image from preservation microfilm on the left shows how two fragments of one page were separately bound together, while the ultraviolet digital image on the right shows the same manuscript page with the smaller fragment moved to its correct position in relation to the larger fragment. Using a process called “mosaicing” in conjunction with depth dimension, we are investigating the feasibility of creating transformations that seamlessly rejoin such separated fragments.
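
One simple kind of transformation for repositioning a fragment is a rigid motion in the image plane: a rotation plus a shift. The sketch below estimates that motion by least squares from pairs of matching points, for example points an editor marks on the fragment and at its intended location. This is a two-dimensional simplification that ignores depth and any stretching of the vellum, and all names in it are hypothetical.

```python
import math


def estimate_rigid_transform(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst.

    `src` and `dst` are equal-length lists of (x, y) pairs in matching order.
    """
    n = len(src)
    scx = sum(p[0] for p in src) / n
    scy = sum(p[1] for p in src) / n
    dcx = sum(q[0] for q in dst) / n
    dcy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - scx, py - scy  # source point relative to its centroid
        bx, by = qx - dcx, qy - dcy  # target point relative to its centroid
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the target one.
    tx = dcx - (c * scx - s * scy)
    ty = dcy - (s * scx + c * scy)
    return theta, tx, ty


def apply_rigid_transform(point, theta, tx, ty):
    """Rotate `point` by `theta` about the origin, then shift by (tx, ty)."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + tx, s * x + c * y + ty)
```

Once the angle and shift are known, every pixel coordinate of the smaller fragment can be passed through `apply_rigid_transform` to place it beside the larger one.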

Searching Images with Computational Methods

Computers have historically been quite adept at storing, searching, and retrieving alphanumeric data: Searching textual documents, particularly when encoded with a standard markup system like SGML (Standard Generalized Markup Language), can quickly retrieve large quantities of specific information. Directly searching images for specific content, however, presents major challenges. Unlike alphanumeric letters or words, image content, such as a handwritten letterform, never looks exactly the same twice. Consequently, a specified image must first be identified, and the search must look for a region of an image that approximately matches the specified image. Because searching images requires image matching and processing, searching image data is far more computationally intensive than searching alphanumeric data. To speed up searching, image data is typically pre-processed to identify content that users are likely to seek again. However, content that is likely to be of special interest depends on the collection, so the search system must be easily configured to identify collection-specific content.
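
The approximate matching described above can be illustrated with a basic sliding-window search. The sketch below compares a small query image against every position in a larger image and reports the position with the smallest sum of squared differences. It is a generic textbook technique, not the project's search system, and the function name and list-of-rows image format are assumptions.

```python
def find_best_match(image, template):
    """Return (score, top, left) of the window that best matches `template`.

    Both arguments are lists of rows of gray levels. The score is the sum of
    squared differences, so 0 means an exact match and larger means worse.
    Returns None when the template does not fit inside the image.
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = None
    for top in range(ih - th + 1):
        for left in range(iw - tw + 1):
            score = sum(
                (image[top + r][left + c] - template[r][c]) ** 2
                for r in range(th)
                for c in range(tw)
            )
            if best is None or score < best[0]:
                best = (score, top, left)
    return best
```

The nested loops visit every window and every pixel inside it, which shows why image search costs so much more than text search and why pre-processing a collection to index likely targets pays off.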

We are developing a framework for creating document-specific image processing algorithms that can locate, identify, and classify individual letterforms. In some cases a transcription may be incomplete or inaccurate because the letterforms are badly damaged or distorted and therefore difficult to identify. Although no two handwritten letters are ever exactly alike, the problem is greatly aggravated in the case of damaged or distorted text. By analyzing several representative letterforms, we hope to build computer models that can be used to perform probabilistic pattern matching of damaged letterforms. Developing such a system is a prerequisite to our being able to identify fragmentary text in these manuscripts.
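
A minimal sketch of probabilistic matching, under strong simplifying assumptions: each letterform is a small binary grid (1 for ink, 0 for bare vellum), a model is the per-pixel ink frequency across several representative samples, and a damaged letterform marks lost pixels as `None` so that they are skipped when scoring. The models the project intends to build are not described in the article; every name and data format here is hypothetical.

```python
import math


def build_model(samples):
    """Per-pixel ink probability from binary samples, with add-one smoothing.

    Smoothing keeps every probability strictly between 0 and 1, so one
    unexpected pixel cannot drive the score to minus infinity.
    """
    n = len(samples)
    height, width = len(samples[0]), len(samples[0][0])
    return [
        [(sum(s[r][c] for s in samples) + 1) / (n + 2) for c in range(width)]
        for r in range(height)
    ]


def log_likelihood(model, glyph):
    """Log-probability of the surviving pixels of `glyph` under `model`."""
    total = 0.0
    for model_row, glyph_row in zip(model, glyph):
        for p, v in zip(model_row, glyph_row):
            if v is None:
                continue  # pixel destroyed or hidden: no evidence either way
            total += math.log(p if v == 1 else 1.0 - p)
    return total


def classify(models, glyph):
    """Return the name of the model that best explains the surviving pixels."""
    return max(models, key=lambda name: log_likelihood(models[name], glyph))
```

Because missing pixels are ignored rather than counted as mismatches, a letterform with a burn hole through it can still be ranked against each candidate by whatever evidence survives.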

Figure 3

A transcription significantly augments the search capability of an image-based digital edition. Linking a transcription to the corresponding part of an image narrows the search space and also assists an editor who’s struggling to decipher a charred leaf. (See Figure 3.) For example, we know that the lines of script were originally very uniform because the scribes who wrote the manuscripts routinely scored guidelines directly into the vellum before beginning to write the text. In the damaged manuscripts we are using, some lines of script are still evenly spaced, but many others are extremely distorted by the heat of the fire. Because keeping one’s place when transcribing such manuscripts is difficult, we are exploring techniques to facilitate linking a line of script in a manuscript image with the editor’s textual transcription.
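
For leaves whose lines of script are still roughly horizontal, one baseline way to link image and transcription is to find bands of rows that contain ink and pair them, in order, with the transcribed lines. The sketch below does only that; it would not cope with the heat-distorted lines described above, which is exactly why more capable techniques are needed. The function names and the binary-image format are assumptions for illustration.

```python
def find_text_lines(binary_image, min_ink=1):
    """Return (top_row, bottom_row) bands whose rows hold at least `min_ink` ink pixels.

    `binary_image` is a list of rows of 0/1 values, where 1 marks ink.
    """
    bands = []
    start = None
    for r, row in enumerate(binary_image):
        has_ink = sum(row) >= min_ink
        if has_ink and start is None:
            start = r  # a new band of script begins
        elif not has_ink and start is not None:
            bands.append((start, r - 1))  # the band ended on the previous row
            start = None
    if start is not None:
        bands.append((start, len(binary_image) - 1))
    return bands


def link_transcription(bands, transcription_lines):
    """Pair each detected band with the transcription line in the same position."""
    return [
        {"rows": band, "text": text}
        for band, text in zip(bands, transcription_lines)
    ]
```

Each resulting record tells a viewer which rows of the image to highlight when the editor selects a line of the transcription, and the reverse.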

Editing and Annotating the Damaged Manuscripts

Using these new processing techniques that we’re developing specifically for scholars in the humanities, our Digital Atheneum team plans to create and widely disseminate a digital library of electronic editions of these previously inaccessible Cotton Library manuscripts that we’ve digitally restored and reconstructed. As aids to research, we also intend to provide structured information such as electronic transcripts and edited texts, commentaries and annotations, links from portions of images to text and from text to images, and ancillary materials such as glossaries and bibliographies. We are encoding the transcripts and edited texts in SGML to facilitate comprehensive searches for detailed information in both the texts and the images, and are converting both the transcripts and editions to HTML or XML so they can be displayed by Internet browsers.
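
To suggest what a marked-up transcript with image links might look like once converted to XML, the sketch below builds a small document in which each line of text carries the rectangle it occupies in the page image. The element and attribute names (`folio`, `line`, `region`) are invented for this example and do not come from the project's SGML encoding, which the article does not describe.

```python
import xml.etree.ElementTree as ET


def build_transcript(folio_id, image_file, lines):
    """Return an XML string linking transcribed lines to image regions.

    `lines` is a list of (text, (left, top, right, bottom)) pairs, where the
    rectangle gives pixel coordinates in `image_file` (hypothetical layout).
    """
    root = ET.Element("folio", {"id": folio_id, "image": image_file})
    for number, (text, region) in enumerate(lines, start=1):
        left, top, right, bottom = region
        line = ET.SubElement(
            root,
            "line",
            {"n": str(number), "region": f"{left},{top},{right},{bottom}"},
        )
        line.text = text
    return ET.tostring(root, encoding="unicode")
```

Because the text and its coordinates live in one structured record, a search that finds a word in the transcription can lead directly to the matching part of the image.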

Another important application we’re developing as part of this project is a generic toolkit to assist other editors in assembling complex editions from high-resolution digital manuscript data. The toolkit is being designed for scholars in the humanities who would like to produce electronic editions, but do not have access to programming support. An editor can then collect and create the components of an electronic edition for any work (digital images, transcriptions, edited text, glossaries, annotations, and so forth) and use the generic toolkit to fashion a sophisticated interface to electronically display or publish the edition. The increased ability to create electronic editions will enable more libraries to provide access to previously unusable or untouchable collections of primary resources in the humanities.

The Digital Atheneum’s Funding and Support Tools

The Digital Atheneum is funded by the National Science Foundation’s Digital Libraries Initiative (NSF-DLI2) with major support from IBM’s Shared University Research (SUR) program. The funding lasts until March of 2002, and although our team hopes to have the project completed before then, there are no guarantees with this kind of work. The British Library is providing privileged access to the manuscripts in the Cotton Collection as well as to curatorial expertise and its digitization resources. Much of the work on the Digital Atheneum is being conducted in a new collaboratory for Research in Computing for Humanities (RCH) located in the William T. Young Library at the University of Kentucky.

The five authors are the principal investigators for the Digital Atheneum. W. Brent Seales (Ph.D., Wisconsin) and James Griffioen (Ph.D., Purdue) are associate professors of computer science; Kevin Kiernan (Ph.D., Case Western Reserve) is a professor of English; Cheng Jiun Yuan is a doctoral student and research assistant in computer science; and Linda Cantara (M.S.L.S., Kentucky) is a master’s student and research assistant in English. All of them work at the University of Kentucky in Lexington. The Digital Atheneum Web site is http://www.digitalatheneum.org.
